How the Transformer DecoderLayer Powers NLP Models

In the world of artificial intelligence, particularly in natural language processing (NLP) and machine translation, Transformers have become a game-changer. One of the core components of a Transformer model is the DecoderLayer. If you’re building or optimizing a deep learning model for these tasks, understanding how the Transformer DecoderLayer works is key to getting good results.

In this blog post, we’ll take a closer look at the DecoderLayer: why it’s important and how it operates within a Transformer model. We’ll also explore some practical applications and show you how to build one, all while keeping things simple and conversational.

What is a Transformer? A Quick Overview

Before diving into the DecoderLayer, let’s briefly cover what a Transformer is. Transformers are deep learning models used in tasks like language translation, text generation, and summarization. Unlike older architectures such as RNNs (Recurrent Neural Networks), which step through a sequence one token at a time, Transformers use attention mechanisms that relate every position in a sequence to every other position in a single, parallel computation.
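To make “every position at once” concrete, here is a minimal sketch of scaled dot-product attention, the operation inside every attention layer, written with plain PyTorch tensor ops. The function name and toy sizes are our own illustrative choices (PyTorch 2.0+ also ships a built-in `torch.nn.functional.scaled_dot_product_attention`).

```python
import math
import torch

def scaled_dot_product_attention(q, k, v, mask=None):
    """softmax(Q K^T / sqrt(d_k)) V for tensors shaped (batch, seq_len, d_k)."""
    d_k = q.size(-1)
    # Similarity of every query with every key, computed for all positions in one matmul
    scores = q @ k.transpose(-2, -1) / math.sqrt(d_k)
    if mask is not None:
        # Positions where mask is True are blocked from receiving attention
        scores = scores.masked_fill(mask, float("-inf"))
    weights = torch.softmax(scores, dim=-1)  # each row sums to 1
    return weights @ v, weights

torch.manual_seed(0)
x = torch.randn(1, 4, 8)  # one sequence of 4 tokens, 8 features each
out, weights = scaled_dot_product_attention(x, x, x)
print(out.shape, weights.shape)  # torch.Size([1, 4, 8]) torch.Size([1, 4, 4])
```

Each output row is a weighted average of all value vectors, so no loop over time steps is needed.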

A Transformer has two main components:

- Encoder: Takes the input sequence (such as a sentence) and converts it into a hidden representation.

- Decoder: Takes that hidden representation and generates an output sequence (like a translated sentence or a summary).

This architecture has made Transformers incredibly effective for tasks where understanding context across an entire sequence is crucial.
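Here is a small sketch of that encoder-to-decoder data flow using PyTorch’s built-in `nn.TransformerEncoder` and `nn.TransformerDecoder`. The random tensors stand in for embedded sentences, and the sizes are arbitrary toy values.

```python
import torch
import torch.nn as nn

d_model, nhead = 32, 4  # toy sizes chosen for illustration

# Encoder: turns the input sequence into a hidden representation ("memory")
encoder = nn.TransformerEncoder(
    nn.TransformerEncoderLayer(d_model, nhead, batch_first=True), num_layers=2
)
# Decoder: reads the memory plus the output tokens produced so far
decoder = nn.TransformerDecoder(
    nn.TransformerDecoderLayer(d_model, nhead, batch_first=True), num_layers=2
)

src = torch.randn(1, 7, d_model)  # stand-in for an embedded 7-token input sentence
tgt = torch.randn(1, 5, d_model)  # stand-in for an embedded 5-token output prefix

# Causal mask so each output position only sees earlier output positions
causal = torch.triu(torch.ones(5, 5, dtype=torch.bool), diagonal=1)

memory = encoder(src)                         # one vector per input token
out = decoder(tgt, memory, tgt_mask=causal)   # one vector per output position
print(memory.shape, out.shape)  # torch.Size([1, 7, 32]) torch.Size([1, 5, 32])
```

A real model would add token embeddings, positional encodings, and a final linear layer that turns `out` into vocabulary scores.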

What is a Transformer DecoderLayer?

The DecoderLayer is one of the building blocks of the Transformer’s decoder, which is a stack of identical DecoderLayers. Each layer combines the encoder’s representations with the tokens generated so far, so that the decoder as a whole can produce meaningful outputs, like translating a sentence from one language to another, word by word.

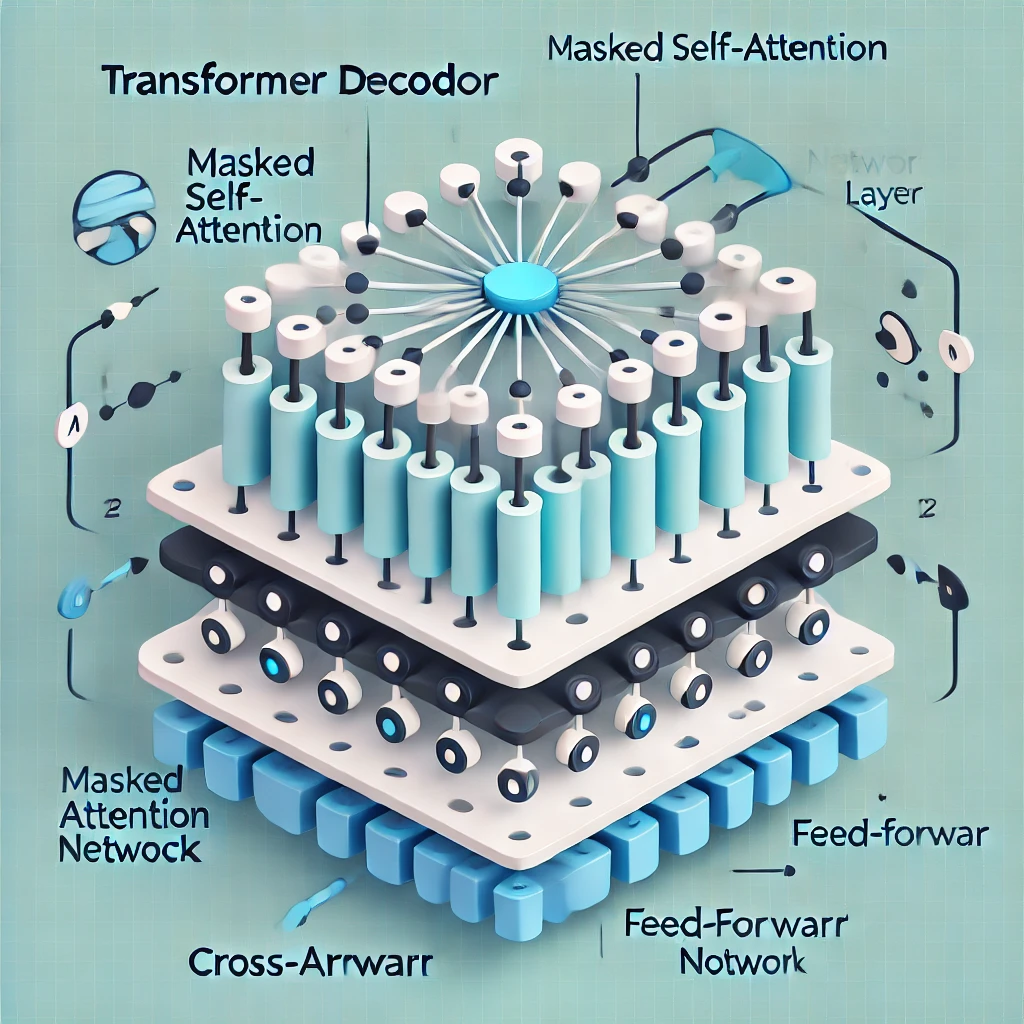

The DecoderLayer is composed of three main parts:

- Masked Multi-Head Self-Attention: This part of the DecoderLayer lets the model attend to previous outputs (i.e., words that have already been generated) while ignoring future tokens. For example, when generating a sentence, the model looks at words it has already predicted but doesn’t “cheat” by looking at future words it hasn’t predicted yet.

- Cross-Attention: This sublayer connects the decoder to the encoder. It lets the decoder attend to the most relevant parts of the input sequence (as encoded by the encoder) while generating the output.

- Feed-Forward Network: After the attention steps, the decoder applies a position-wise feed-forward network (two linear layers with a nonlinearity between them, applied to each position independently) before passing the result to the next DecoderLayer.

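In the original Transformer, each of these three sublayers is wrapped in a residual connection followed by layer normalization. Together, they let every output position draw on both the tokens generated so far and the full input sequence, which is what makes the outputs context-aware.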
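The “no cheating” rule of masked self-attention comes down to a triangular mask. This sketch builds one and passes it to PyTorch’s `nn.MultiheadAttention`; the sizes and random inputs are illustrative assumptions.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
seq_len, d_model, nhead = 4, 16, 2  # toy sizes for illustration

# Causal mask: True above the diagonal means "this query may not see that key"
causal_mask = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)

self_attn = nn.MultiheadAttention(d_model, nhead, batch_first=True)
x = torch.randn(1, seq_len, d_model)  # stand-in for embeddings of tokens generated so far

# weights has shape (1, seq_len, seq_len), averaged over heads by default
_, weights = self_attn(x, x, x, attn_mask=causal_mask)

# Every weight pointing at a "future" token (above the diagonal) is exactly zero
print(weights[0].triu(diagonal=1).abs().max().item())  # 0.0
```

Row i of `weights` shows how token i distributes its attention over tokens 0..i only; the first token can attend only to itself.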

How Does the DecoderLayer Work?

Now that we know the parts, let’s look at how the Transformer DecoderLayer works step by step:

- Masked Multi-Head Self-Attention: When generating a sequence, the decoder may only use past tokens (e.g., previously generated words). For instance, if a Transformer translates a sentence from English to French, the mask ensures that each position attends only to earlier translated words, never to later ones. This matters most during training, when the whole target sentence is fed in at once: without the mask, the model could simply copy the next word instead of learning to predict it.

- Cross-Attention: Once the model has considered its previous outputs, it uses cross-attention to focus on the relevant parts of the input. The queries come from the decoder, while the keys and values come from the encoder’s output. For example, when translating “The dog is running” into French, the decoder can weight “dog” and “running” heavily when generating the corresponding French words. This is how the information the encoder has extracted flows into the decoder’s output.

- Feed-Forward Network: After both attention steps, the data passes through a feed-forward network. The same two-layer network is applied to every position independently, adding nonlinear processing on top of what the attention steps gathered.
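The cross-attention step is easiest to understand through tensor shapes. In this sketch (toy sizes, random stand-ins for real decoder states and encoder output), the decoder supplies the queries and the encoder output supplies the keys and values.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, nhead = 16, 2  # toy sizes for illustration
cross_attn = nn.MultiheadAttention(d_model, nhead, batch_first=True)

decoder_states = torch.randn(1, 3, d_model)  # 3 target tokens generated so far
encoder_memory = torch.randn(1, 4, d_model)  # e.g. 4 source tokens: "The dog is running"

# Queries come from the decoder; keys and values come from the encoder output
out, weights = cross_attn(decoder_states, encoder_memory, encoder_memory)

print(out.shape)      # torch.Size([1, 3, 16]): one vector per target position
print(weights.shape)  # torch.Size([1, 3, 4]): each target token's weights over 4 source tokens
```

No causal mask is needed here: the whole source sentence is already known, so every target position may look at all of it.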

Why is the Transformer DecoderLayer Important?

The DecoderLayer is crucial for any task that involves generating text or sequences, such as:

- Language Translation: In encoder-decoder translation models (the task the original Transformer was designed for), the decoder generates translated sentences by attending to the original input (in another language) and to its previous outputs.

- Text Summarization: When summarizing long documents, the decoder focuses on key parts of the original text (via cross-attention) and generates concise summaries.

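- Text Generation: Models like GPT-3 generate coherent text from a prompt, using previous words to predict the next word so that the generated text follows a logical flow. Note that GPT-style models are decoder-only: their layers keep masked self-attention and the feed-forward network but drop cross-attention, since there is no encoder to attend to.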

In short, the Transformer DecoderLayer helps ensure the model’s outputs are accurate, context-aware, and fluent.
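To see “use previous words to predict the next word” in action, here is a sketch of greedy autoregressive generation with a tiny decoder-only model. `TinyDecoderOnlyLM` and `greedy_generate` are hypothetical names, the sizes are toy values, and the model is untrained, so the generated token IDs are arbitrary. We reuse PyTorch’s `nn.TransformerEncoderLayer` with a causal mask, because that combination has exactly the masked-self-attention-plus-FFN structure of a decoder-only block.

```python
import torch
import torch.nn as nn

# Hypothetical toy sizes; the model is untrained, so its predictions are arbitrary.
VOCAB, D_MODEL, NHEAD = 50, 32, 4

class TinyDecoderOnlyLM(nn.Module):
    """Decoder-only stack: masked self-attention + FFN, no cross-attention."""

    def __init__(self):
        super().__init__()
        self.embed = nn.Embedding(VOCAB, D_MODEL)
        block = nn.TransformerEncoderLayer(D_MODEL, NHEAD, batch_first=True)
        self.blocks = nn.TransformerEncoder(block, num_layers=2)
        self.lm_head = nn.Linear(D_MODEL, VOCAB)

    def forward(self, tokens):  # tokens: (batch, seq_len) integer IDs
        seq_len = tokens.size(1)
        causal = torch.triu(torch.ones(seq_len, seq_len, dtype=torch.bool), diagonal=1)
        h = self.blocks(self.embed(tokens), mask=causal)
        return self.lm_head(h)  # (batch, seq_len, VOCAB) next-token scores

@torch.no_grad()
def greedy_generate(model, prompt, max_new_tokens):
    tokens = prompt
    for _ in range(max_new_tokens):
        logits = model(tokens)  # re-run on everything generated so far
        next_token = logits[:, -1].argmax(dim=-1, keepdim=True)  # most likely next token
        tokens = torch.cat([tokens, next_token], dim=1)  # append it and repeat
    return tokens

model = TinyDecoderOnlyLM().eval()
out = greedy_generate(model, torch.tensor([[1, 2, 3]]), max_new_tokens=5)
print(out.shape)  # torch.Size([1, 8])
```

Greedy decoding is only one strategy; sampling and beam search pick the next token differently, but the loop structure is the same. Positional encodings are omitted here for brevity.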

How Does the DecoderLayer Differ from the EncoderLayer?

Though both the encoder and decoder are key components of the Transformer, they serve different purposes:

- Encoder: Processes the entire input sequence and learns how different parts of the sequence relate to each other. It doesn’t generate anything; it just creates a hidden representation.

- Decoder: Generates outputs based on the encoder’s hidden representations and its own previous outputs. It uses masking to avoid looking ahead in the sequence, so each position can only draw on the positions before it.

The key difference lies in their use of attention mechanisms: the encoder uses only self-attention, with every position free to see every other position, while the decoder combines masked self-attention (to attend to its own previous outputs) and cross-attention (to attend to the encoder’s outputs).
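You can check this difference directly on PyTorch’s built-in layers. In PyTorch, `nn.TransformerDecoderLayer` stores its cross-attention in an attribute named `multihead_attn`, which `nn.TransformerEncoderLayer` lacks; the sizes below are toy values.

```python
import torch.nn as nn

d_model, nhead = 64, 4  # toy sizes for illustration
enc = nn.TransformerEncoderLayer(d_model, nhead)
dec = nn.TransformerDecoderLayer(d_model, nhead)

def n_params(module):
    return sum(p.numel() for p in module.parameters())

# Both layers have self-attention; only the decoder layer has cross-attention
print(hasattr(enc, "self_attn"), hasattr(enc, "multihead_attn"))  # True False
print(hasattr(dec, "self_attn"), hasattr(dec, "multihead_attn"))  # True True

# The extra cost is one attention block (4*d^2 + 4*d) plus one LayerNorm (2*d)
extra = n_params(dec) - n_params(enc)
print(extra == 4 * d_model**2 + 6 * d_model)  # True
```

The two layers share the same feed-forward size by default, so the entire difference comes from the cross-attention sublayer and its LayerNorm.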

Building a Transformer DecoderLayer: A Practical Guide

Let’s say you want to build your own DecoderLayer. Luckily, frameworks like PyTorch and TensorFlow make it easy. Here’s a simple code example using PyTorch to build a DecoderLayer:

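```python
import torch
import torch.nn as nn


class TransformerDecoderLayer(nn.Module):
    """Post-LN decoder layer: masked self-attention, cross-attention, feed-forward."""

    def __init__(self, d_model, nhead):
        super().__init__()
        # batch_first=True: all tensors are shaped (batch, seq_len, d_model)
        self.self_attn = nn.MultiheadAttention(d_model, nhead, batch_first=True)
        self.cross_attn = nn.MultiheadAttention(d_model, nhead, batch_first=True)
        # Position-wise feed-forward network with a 4x wider hidden layer
        self.ffn = nn.Sequential(
            nn.Linear(d_model, d_model * 4),
            nn.ReLU(),
            nn.Linear(d_model * 4, d_model),
        )
        self.norm1 = nn.LayerNorm(d_model)
        self.norm2 = nn.LayerNorm(d_model)
        self.norm3 = nn.LayerNorm(d_model)

    def forward(self, tgt, memory):
        # tgt: decoder input (batch, tgt_len, d_model)
        # memory: encoder output (batch, src_len, d_model)
        tgt_len = tgt.size(1)
        # Causal mask: True above the diagonal blocks attention to future positions
        causal_mask = torch.triu(
            torch.ones(tgt_len, tgt_len, dtype=torch.bool, device=tgt.device), diagonal=1
        )
        # 1) Masked self-attention, then residual connection + LayerNorm
        tgt2 = self.self_attn(tgt, tgt, tgt, attn_mask=causal_mask)[0]
        tgt = self.norm1(tgt + tgt2)
        # 2) Cross-attention: queries from the decoder, keys/values from the encoder
        tgt2 = self.cross_attn(tgt, memory, memory)[0]
        tgt = self.norm2(tgt + tgt2)
        # 3) Feed-forward network, then residual connection + LayerNorm
        tgt2 = self.ffn(tgt)
        tgt = self.norm3(tgt + tgt2)
        return tgt
```

In this code, we build a simple DecoderLayer with: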

- Self-attention to focus on the decoder’s own outputs,

- Cross-attention to connect to the encoder’s outputs,

- And a Feed-Forward Network to process the attended data.

You can tweak parameters like the number of attention heads or the hidden dimensions to suit your task. Keep in mind that PyTorch’s multi-head attention requires d_model to be divisible by nhead.
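Here is a quick sketch of what those two knobs actually change, using PyTorch’s built-in `nn.TransformerDecoderLayer` (its `dim_feedforward` argument sets the hidden size of the feed-forward network). The specific sizes are arbitrary examples.

```python
import torch.nn as nn

def n_params(module):
    return sum(p.numel() for p in module.parameters())

d_model = 64
counts = {}
# nhead must divide d_model; each head then works in d_model // nhead dimensions
for nhead in (2, 4, 8):
    layer = nn.TransformerDecoderLayer(d_model, nhead, dim_feedforward=256)
    counts[nhead] = n_params(layer)
    print(f"nhead={nhead}  head_dim={d_model // nhead}  params={counts[nhead]}")
# The count is identical for every nhead: heads split d_model, they don't add weights

# The feed-forward hidden size, by contrast, directly changes the parameter count
small = nn.TransformerDecoderLayer(d_model, 4, dim_feedforward=256)
large = nn.TransformerDecoderLayer(d_model, 4, dim_feedforward=1024)
print(n_params(large) - n_params(small))  # 2 * 64 * 768 + 768 = 99072
```

In other words, more heads give the model more, smaller attention patterns at the same parameter cost, while a wider feed-forward layer adds capacity (and memory) directly.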

Conclusion

The Transformer DecoderLayer is critical in modern deep learning models, particularly in tasks like language translation, text generation, and summarization. Understanding how the DecoderLayer works—especially its attention mechanisms and feed-forward networks—can help you optimize your models and generate better results.

Whether you’re building from scratch or fine-tuning an existing model, the DecoderLayer is what lets your model produce coherent, context-aware outputs. Explore and experiment with these layers to get the most out of your Transformer-based models.
